Pioneers of computational possibilities

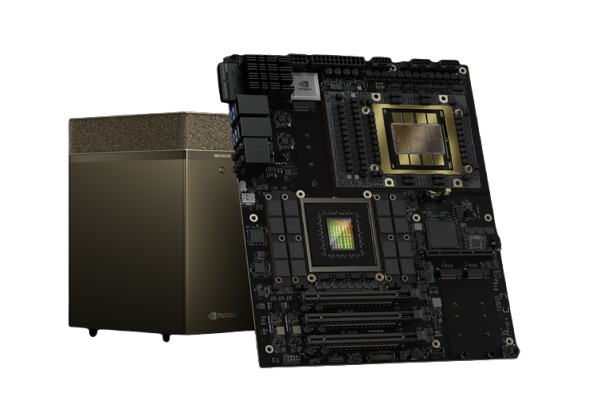

For more than 25 years, NVIDIA has pioneered visual computing solutions to tackle challenges ordinary computers cannot. NVIDIA delivers high-performance GPU solutions for modern workloads such as real-time rendering, high-performance computing, virtual desktops and artificial intelligence. GPU-accelerated computing opens up enormous new market opportunities and enables problem solving at remarkable speeds.

Certified Expertise with Nextron

Nextron can offer the full range of NVIDIA’s professional products, and we are a certified NVIDIA Partner in the following areas:

Compute

Artificial intelligence, high-performance computing and using GPUs to accelerate computing tasks in general.

Virtualization

Allowing GPU resources to be shared by many simultaneous users, for example in virtual desktop environments.

Visualization

Using GPUs to show lifelike graphics in professional environments, such as engineering, video editing and virtual reality.

Networking

Accelerated networking solutions offer the choice of InfiniBand and Ethernet, giving enterprises the infrastructure that supports develop-to-deploy implementations across all modern workloads and storage requirements.

With our expertise, we can help you find the optimal solution for your workload, whether you need a single card, a general GPU server or a specialized AI solution. Our fully trained technicians can assist with on-site installation if needed.